This year’s Canadian Evaluation Society (CES) conference was held in Calgary, Alberta, and had a theme of Co-Creation. As always, I had a great time connecting with old friends and making new ones, learning a lot, and getting to share some of my own learnings too.

As I usually do at conferences, I took a tonne of notes, but for this blog posting I’m going to summarize some of my insights by topic (in alphabetical order) rather than by session, as several of the sessions I attended covered similar things. Where possible, I’ve included the names of the people who said the brilliant things I took note of, because I think it is important to give credit where credit is due, but I apologize in advance if my paraphrasing is not as elegant as the way the speakers actually said them. Anything in [square brackets] is my own thoughts, added upon reflection on what the presenter was talking about.

Complex Systems

- I didn’t see as many things about complexity as I usually do at evaluation conferences.

- No one person can get their mind around a complex system. You need all the people in the room to understand it. Systems are messy because people are messy. (Patrick Field)

Environment

- How do we get past the view that humans are supreme beings and the environment is just there to serve us (vs. a stewardship view)? This view is deeply embedded in our identities. Even the legal system is set up to prioritize making money (and views environmentalism as a “nuisance”) (Jane Davidson)

- People fear being evaluated on things they don’t (feel they) have control over: “Don’t evaluate me on sustainability stuff! It’s affected by so much else!” Looking at outcomes that are outside the control of the program isn’t meant to evaluate a program/organization on its performance, but to identify the things that are constraining it from achieving the outcomes it is trying to achieve. And it’s not as though, by looking only at the things within the box of your program, you can really control everything in the box, or that those things aren’t affected by things outside your program. [No program is a closed system.] (Jane Davidson)

- We focus on doing evaluations to meet the client’s requests, and maybe we stretch them to cover some other things. Sometimes you can slip in things the client didn’t ask for and then use them to demonstrate their value. People often limit what they ask for to what they think is possible, and sometimes you need to demonstrate the possibilities first. (Jane Davidson)

- It’s not just about asking “how good were the outcomes?” but “how good was this organization at making the trade-offs?” (Jane Davidson)

Evaluation Approaches and Methods

- People limit their questions to what they think can be measured (e.g., “I want to see indicator X move by Y%”). When clients say “we can’t measure that!”, Jane tells them: “Look, there are academics who have spent their whole lives studying ‘love’. If they can do that, we can find a way to measure what you are really interested in. And it doesn’t have to be quantitative!” The client isn’t a measurement expert, and they shouldn’t be limiting their questions to what they think can be measured. (Jane Davidson)

- Once upon a time, evaluation was about “did you achieve your objectives?” but now we think about the side effects too! (Jane Davidson)

Evaluation

- Brower & Elnitsky talked about having to distinguish among evaluation, quality improvement, data collection, clinical indicators, and performance indicators (and how, in their view, these aren’t different things), and about talking to their clients about how evaluation adds value. This struck a chord with me, as it was similar to some of the things that my co-authors and I discuss in a paper we currently have under review in the Canadian Journal of Program Evaluation.

- Sarah Sangster felt that evaluation is like research, but more. She described how evaluation requires all the things you need to do research, but also has some things that research doesn’t (e.g., some evaluation-specific methods). She talked about how the characteristics people sometimes use to differentiate evaluation from research are really shared by both (e.g., evaluation is often defined as judging “merit/value/worth”, but research does that too, such as when it judges the “best treatment”). [Some of the things she talked about were things that my co-authors and I grappled with in our paper, such as how research is a lot more varied than people typically give it credit for (e.g., participatory action research and community-based research stretch the boundaries of traditional research in that the questions being explored come from the community instead of from the researchers, and the results are specifically intended to be applied in the community rather than just being knowledge for knowledge’s sake).]

Evaluation Competencies

- The CES is updating its list of evaluation competencies – those things a person should know and be able to do in order to be a competent evaluator. The evaluation competencies are used by the society to assess applicants for the Credentialed Evaluator designation – people have to demonstrate that they’ve met the competencies. The competencies are being revised and updated and the committee is taking comments on the draft until June 30, 2018. They expect to finalize the new competencies in Sept 2018.

Evaluation Ethics

- The CES is also looking at renewing its ethics statement, which hasn’t been updated in 20 years! I went to a session where we looked at the existing statement, and it clearly needs a lot of work. The society is currently doing an environmental scan (e.g., looking at other evaluation societies’ ethics guidelines/principles/codes/etc.) and consultations with stakeholders (e.g., the session I attended at the conference), and plans to decide by the fall whether to just tweak the existing statement or completely overhaul it. They hope to have a finished product to unveil at next year’s CES conference.

- During the session that Alec from my team led, which was a lightning round table where people circled through various table discussions, one of the things we talked about while discussing observation was ethics. For example, it is generally understood that if you are in a public place, you might be observed, so it is ethical to observe people there. The question arose: “Are hospitals public places?”

Evaluation, Use Of

- There was a fascinating panel of 3 mayors who were invited to the conference to talk about what value evaluation can add for municipalities. None of the mayors had even heard of the Canadian Evaluation Society prior to being invited to the conference, so we definitely have our work cut out for us in terms of advocating for evaluation at the municipal level. There is a lot of evaluation work that could be done at the municipal level, and it would be worthwhile for the society to educate municipal politicians about what we do and how it can help them. The mayors were open to the idea of using evaluation findings in their decision making. There was a suggestion that there should be a panel of evaluators at the Canadian municipalities conference, just like we had the mayors’ panel at our evaluator conference, and I seriously hope the CES pursues this idea.

Evaluation as intervention

- Evaluators affect the things they evaluate. The act of observing is well known to affect the behaviour of those being observed. As well, we know that “what gets measured gets managed,” so setting up specific indicators that will be measured will cause people to do things that they might not otherwise have done. This is an important thing that we should be discussing in our evaluation work.

Indigenous Evaluation

There was a lot of discussion around indigenous populations and indigenous evaluation, in keeping with the CES’s commitment “to incorporating reconciliation in its values, principles, and practices.”

- The opening keynote on Reconciliation and Culturally Responsive Evaluation was introduced with “You will feel uncomfortable and that is by design. Ask yourself why it makes you uncomfortable.” [The history – and present – of indigenous people is a hard thing to grapple with for many reasons. There are so many injustices that have been done – and continue to be done – and each of us participates in a system that perpetuates that injustice. Doing nothing about it is to do harm. And for those of us who are not indigenous, a mixture of ignorance about our own history, ignorance about how our actions and inactions contribute to the injustice, privilege, and not knowing what we can do can all contribute to this discomfort.]

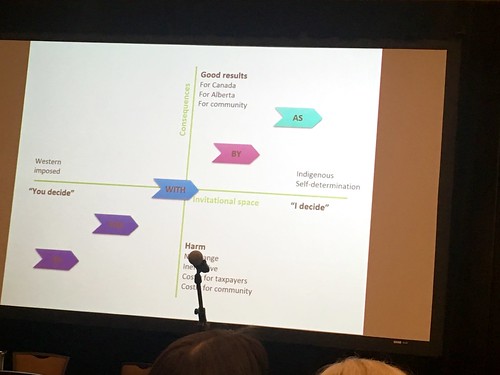

- Several of the people speaking about indigenous evaluation talked about the need for indigenous-led evaluation. We have a long history of evaluation and research in indigenous communities being led by non-indigenous people who take from the community, don’t contribute to it, don’t ask the questions the community needs answered, don’t understand things from the community’s perspective, impose Western views/perspectives/models, and then leave the community no better off than before.

- “Scientific colonialism”: colonial powers export raw data from communities to “process” it. (Nicole Bowman)

- Despite all the evaluation that has been going on for years, we still face all the same problems – maybe worse. (Kate McKegg)

- “How do we ensure evaluation is socially just, as well as true, that it attends to the interests of everyone in society and not solely the privileged” (House, 1991, cited by Kate McKegg).

- “Culturally-responsive evaluation seems to be about giving ‘permission’ to colonizers and settlers to do evaluation in indigenous communities” (Kate McKegg).

- “Sometimes the stories we are telling are not the stories that need to be told.” (Larry Bremner) [Larry was talking about the ways in which evaluation can further perpetuate injustice against, and further ignore and marginalize, indigenous people through what we do and do not study.] Is our own work maintaining colonial oppression?

- “Trauma is never far from the surface in indigenous communities.” (Larry Bremner)

- Since many aspects of culture and ceremony have been destroyed by colonialism, how are people supposed to heal, when culture and ceremony are themselves ways of healing? (Nicole Bowman)

- The lifespan of indigenous people is 15 years shorter than that of non-indigenous people.

- There is a lot of diversity among indigenous people in Canada: 617 First Nations, as well as Inuit and Métis; 60 languages.

- Larry Bremner quoted a few people that he’d worked with: “Everyone is talking about reconciliation, but what happened to the ‘truth’ part?” [in reference to the Truth and Reconciliation Commission] and “In my community, reconciliation is about making white people feel less guilty.” [Even work that is supposed to be about addressing injustice against indigenous people gets turned around to serve white people instead.]

- Understanding our history is needed to understand the legal and policy framework in which we live today. Evaluators need to understand authority and power. (Nicole Bowman)

- How can non-indigenous people be good allies?

  - We have to be clear on our own identities as settlers and colonizers, and recognize our privilege. Our identity is shaped by our history and our present. Colonization is still going on, is nonconsensual, and is designed to benefit the privileged. (Kate McKegg)

  - We don’t even know our own history, let alone that of indigenous people. (Kate McKegg)

  - It’s not indigenous people’s responsibility to teach us about this; it’s our own job. Only when we understand ourselves can we hear indigenous people. (Kate McKegg)

  - Do your homework. Expand your indigenous networks. Undertake relevant professional development. Build relationships. (Nan Wehipeihana)

  - Advocate for indigenous-led evaluation: indigenous people evaluating as indigenous people.

- During the opening keynote, an audience member asked how non-indigenous people can learn if it’s not indigenous peoples’ responsibility to teach them.

  - The panelists noted that indigenous people are a small group whose first priority is to do work that helps their communities; expecting them to educate you puts a burden on them that is not their responsibility.

  - Kate McKegg noted that indigenous people have been trying to talk to non-indigenous people for years and we haven’t listened to them. She suggested that we can work with other settlers who want to learn; there is lots available to read, to start.

  - Nicole Bowman noted that observation is how we traditionally learned and that observing is part of science, so do some observing.

  - Larry pointed out that indigenous people have taught their ways to others before, and people have taken their protocols and not used them well, so why should they give non-indigenous people more tools to hurt indigenous people?

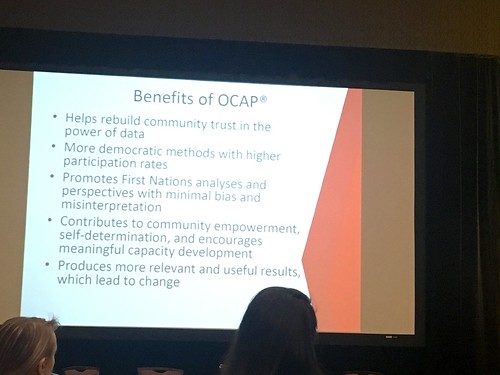

- Lea Bill from the First Nations Information Governance Centre spoke about the OCAP® principles (Ownership, Control, Access, and Possession), which assert that First Nations have rights to all of these with respect to their data. [I had learned about OCAP® before, but hadn’t realized until I saw this presentation that it was a registered trademark.]

- All privacy legislation is about protecting individual privacy rights, but OCAP® is about collective, community rights.

- When you work with indigenous communities, you need to know who the knowledge holders in the community are – they have rights and privileges and if you don’t know, you could offend people and not get good information. (Lea Bill)

- Indigenous indicators are bicultural: all things are interconnected, and human beings are not separate from the environment. (Lea Bill)

Language

- Something I’ve been interested in lately is how people from different disciplines use words differently. Two disciplines might use the same word to mean different things, or different words to mean the same thing. One of the sessions I attended was about a glossary that had been created to clarify words/phrases used by financial/accounting people vs. evaluation people. Check out the glossary here.

Reflection

- I attended a thematic breakfast session that was a live taping of an episode of the Eval Cafe podcast. It was a chance for a group of us to reflect on what we’d learned about at the conference. You can check out the podcast here.

Misc

- We need to think beyond binaries such as “What’s in our control vs. not in our control?”, “Yes/No”, “Good/Bad”, “Pre/Post”. [Few things are really black or white; often things that we think of as binary are really more of a gradient or spectrum, with fuzzy boundaries between them. It’s one of the reasons I like to start questions with “to what extent…”, as in “To what extent did the program achieve its goals?”]

To Dos:

- Watch “The Doctrine of Discovery – Unmasking the Domination Code”

- Read “Pagans in the Promised Land” by Steven T. Newcomb

- Research “are hospitals public places?” for the purposes of observations.

Sessions I Attended:

Keynotes:

- Opening Keynote Panel: Reconciliation and Culturally-Responsive Evaluation: Rhetoric or Reality? with panelists Dr. Nicole Bowman, Larry K. Bremner, Kate McKegg, and Nan Wehipeihana

- Keynote with panelists Dr. Jane Davidson, Patrick Field, Sean Curry, Dr. Juha I. Uitto

- Keynote by Lea Bill

- Mayors’ Panel: A municipal perspective on co-creation and evaluation with panelists Heather Colberg, Mayor of Drumheller, Alberta; Mark Heyck, Mayor of Yellowknife, NWT; Stuart Houston, Mayor of Spruce Grove, Alberta

- Fellows Panel – Evaluation for the Anthropocene.

- Closing Keynote Panel: Reflection on Co-Creation Conference 2018 by CES Fellows – Our rapporteurs, realists, and renegades.

Concurrent Sessions:

- Collaborating to improve wait times for a primary care geriatric assessment and support program by Emily Johnston, Krista Rondeau, Kathleen Douglas-England, Bethan Kingsley, Roma Thomson

- Surveying an Under-Represented Population: What We Learned by Surveying Great-Grandma by Kate Woodman, Krista Brower

- Co-Creating Evaluation Capacity in Primary Care Networks: A Case Example of Lessons Learned by Krista Brower, Sherry Elnitsky, Meghan Black

- Evaluators faced with complexity: presentation of the results of a synthesis of the literature by Marie-Hélène L’Heureux

- Knowledge translation and impacts: unpacking the black box by Ambrosio Catalla Jr, Ryan Catte

- Evaluation and research: Two sides of the same coin or different kettles of fish? by Sarah Sangster, D. Karen Lawson

- Who’s keeping score? A team-based approach to building a performance measurement scorecard by Beth Garner

- Updating the CES Competencies for Evaluators: A Work in Progress by Gail Vallance Barrington, Christine Frank, Karyn Hicks, Marthe Hurteau, Birgitta Larsson, Linda Lee

- Help Us Co-create CES’s Renewal Vision of Ethics in Program Evaluation! by CES Ethics Working Group on Ethics, Environmental Scan and Stakeholder Consultation Subcommittees

- On the Road with the EvalCafe Podcast: Greetings from Calgary! by Carolyn Camman, Brian Hoessler

- Integrating Social Impact Measurement Practice into Social Enterprises: A Sociotechnical Perspective by Victoria Carlan

- From Collaboration to Collective Impact; Measuring Large-scale Social Change by Andrea Silverstone, Debb Hurlock, Tara Tharayil

- The Rosetta Stone of Impact: A Glossary for Investors and Evaluators by David Pritchard, Michael Harnar, Sara Olsen

Presentations I Gave:

- An inside job: Reflections on the practice of embedded evaluation by Amy Salmon, Mary Elizabeth Snow

- How is evaluation indicator development like an orchestra? by Mary Elizabeth Snow, Alec Balasescu, Joyce Cheng, Allison Chiu, Abdul Kadernani, Stephanie Parent

- Can co-creation lead to better evaluation? Towards a strategy for co-creation of qualitative data collection tools by Alec Balasescu, Joyce Cheng, Allison Chiu, Abdul Kadernani, Stephanie Parent, Mary Elizabeth Snow
