I had the good fortune of being able to attend the 35th annual conference of the Canadian Evaluation Society, held at the Ottawa Convention Centre from June 16-18, 2014. I’d only been to one CES conference previously, when it was held in Victoria, BC in 2010, and I was excited to attend this one as I really enjoyed the Victoria conference, both for the things I learned and for the connections I was able to make. This year’s conference proved to be just as fruitful and enjoyable as the Victoria one, and I hope that I’ll be able to attend more regularly in the future.

Disappointingly, the conference did not have wifi in the conference rooms, which made the idea of lugging my laptop around with me less than appealing (I’d been intending to tweet and possibly live blog some sessions, but without wifi, my only option would have been my phone and it’s just not that easy to type that much on my phone). So I ended up taking my notes the old-fashioned way – in my little red notebook – and will just be posting highlights and post-conference reflections here.[1]

Some of the themes that came up in the conference, based on my experience of the particular sessions that I attended, were:

- The professionalization of evaluation. The Canadian Evaluation Society has a keen interest in promoting evaluation as a profession and has created a professional designation called the “Credentialed Evaluator”, which allows individuals with a minimum of two years of full-time work in evaluation and at least a Master’s degree to complete a rigorous process of self-reflection and documentation to demonstrate that they meet the competencies necessary to be an evaluator. Upon doing so, you are entitled to put the letters “CE” after your name. Having this designation distinguishes you as qualified to do the work of evaluation – as otherwise, anyone can call themselves an evaluator – and so it can help employers and agencies wishing to hire evaluators to identify competent individuals. I am proud to say that I hold this designation – one of only ~250 people in the world at this point. At the conference there was much talk about the profession of evaluation, both in terms of CES’s pride that it created the first – and practically only[2] – designation of this type in the world, and in terms of distinguishing between research and evaluation.[3]

- Evidence-based decision making as opposed to opinion-based policy making or “we’ve always done it this way” decision making.[4] This brought up topics such as the nature of knowledge, what constitutes “good” or “appropriate” evidence, and the fallacy of the hierarchy of evidence.[5]

- Supply side and demand side of evaluation. The consensus I saw was that Canada is pretty good at the supply side – training evaluators and providing professional development for them – but could stand to do more work on the demand side – getting more decision makers to understand the importance of evaluations and the evidence they can provide to improve decision making.

- “Accountability vs. Learning” vs. “Accountability for Learning”. One of the purposes for evaluation is accountability – to demonstrate to funders/decision makers/the public that a program is doing what it is intended to do. Another purpose is to learn about the program, with the goal of, for example, improving the program. But some of the speakers at the conference talked about re-framing this to be about programs being “accountable for learning”. A program manager should be accountable for noticing when things aren’t working and for doing something about it.

- If you don’t do it explicitly, you’ll do it implicitly. This came up for me in a couple of sessions. First, in a thematic breakfast where we were discussing Alkin & Christie’s “evaluation theory tree”, which categorizes evaluation theories under “use,” “methods” or “valuing”, we talked about how each main branch is just an entry point, but all three areas still occur. For example, if you are focused on “use” when you design your evaluation (as I typically am), you still have to use methods and there are still values at play. The question is, will you explicitly consider those (e.g., ask multiple stakeholder groups what they see as the important outcomes, to get at different values) or will you not (e.g., you just use the outcomes of interest to the funder, thereby only considering their values and not those of the providers or the service recipients)? The risk, then, is that if you don’t pay attention to the other categories, you will miss out on opportunities to make your evaluations stronger. The second time this theme came up for me was in a session distinguishing evaluation approach, design, and methods. The presenter, from the Treasury Board Secretariat, had evaluated evaluations conducted in government and noted that many discussed approach and methods, but not design. They still had a design, of course, but without having explicitly considered it, the evaluators could easily fall into the trap of assuming that a given approach must use a particular design and discount the possibility of other designs that might have been better for the evaluation. “Rigorous thinking about how we do evaluations leads to rigorous evaluations.”

One of the sessions that particularly resonated with me was “Evaluating Integration: An Innovative Approach to Complex Program Change.” This session discussed the Integrated Care for Complex Patients (ICCP) program – an initiative focused on integrating healthcare services provided by multiple healthcare provider types across multiple organizations, with the aim of providing seamless care to those with complex care needs. The project was remarkably similar to one that I worked on – with remarkably similar findings. Watching this session inspired me to get writing, as I think my project is worth publishing.

As an evaluator who takes a utilization-focused approach to evaluation (i.e., I’m doing an evaluation for one or more specific purposes and I expect the findings to be used for those purposes), I think it’s important to have a number of tools in my toolkit so that when I work on an evaluation I have at my fingertips a number of options for how best to address a given evaluation’s purpose. At the very least, I want to know about as many methods and tools as possible – their purposes, strengths, weaknesses, and the basic idea of what it takes to use them – as I can always learn the specifics of how to do it when I get to a situation where a given method would be useful. At this year’s conference, I learned about some new methods and tools, including:

- Tools for communities to assess themselves:

  - Community Report Cards: a collaborative way for communities to assess themselves.[6]

  - The Fire Tool: a culturally grounded tool for remote Aboriginal communities in Australia to assess and identify their communities’ strengths, weaknesses, services and policies.[7]

- Tools for Surveying Low Literacy/Illiterate Communities:

  - Facilitated Written Survey: Options are read aloud and respondents provide their answers on an answer sheet that has either very simple words (e.g., Yes, No) or pictures (e.g., frowny face, neutral face, smiley face) on it that they can circle or mark a dot beside. You may have to teach the respondents what the simple words or pictures mean (e.g., in another culture, a smiley face may be meaningless).

  - Pocket Chart Voting: Options are illustrated (ideally with photos) and pockets are provided to allow each person to put their vote into a pocket (so it’s anonymous). If you want to disaggregate the votes by, say, demographics, you can give different coloured voting papers to people from different groups.

- Logic Model That Allows You To Dig Into the Arrows: the presenters didn’t actually call it that, but since they didn’t give it a name, I’m using that for now. In passing, some presenters from the MasterCard Foundation noted that they create logic models where each of the arrows – which represent the “if, then” logic in the logic model – is clickable, and when you click it, it takes you to a page summarizing the evidence that supports the logic for that link. It’s a huge pet peeve of mine that so many people create lists of activities, outputs, and outcomes with no links whatsoever between them and call that a logic model – you can’t have a logic model without any logic represented in it, imho. One where you actually summarize the evidence for each link would certainly hammer home the importance of the logic needing to be there. Plus it would be a good way to test whether you are just making assumptions as you create your logic model, or whether there is good evidence on which to base those links.

- Research Ethics Boards (REB) and Developmental Evaluation (DE). One group described how, when they submitted a research ethics application for a developmental evaluation project, they addressed the challenge that REBs generally want a list of focus group/interview/survey questions upfront, but DE is emergent. To do this, they wrote a proposal with a very detailed explanation of what DE is and why it is important, and then created very detailed hypothetical scenarios showing how they would shape the questions in each scenario (e.g., if participants in the initial focus groups brought up X, we would then ask questions like Y and Z). This allowed the reviewers to get a sense of what DE could look like and how the evaluators would do things.

- Reporting Cube. Print out key findings on a card stock cube, which you can put on decision makers’ desks. A bit of an attention-getting way of disseminating your findings!

- Integrated Evaluation Framework [LOOK THIS UP! PAGE 20 OF MY NOTEBOOK]

- Social Return on Investment (SROI) is about considering not just the cost of a program (or the cost savings you can generate), but also the value created by it – including social, environmental, and economic value. It seemed very similar to Cost-Benefit Analysis (CBA) to me, so I need to go learn more about this!

- Rapid Impact Evaluation: I need to read more about this, as the presentation provided an overview of the process, which involves expert and technical groups providing estimates of the probability and magnitude of effects, but I didn’t feel like I really got enough out of the presentation to see how this was more than just people’s opinions about what might happen. There was talk about the method having high reliability and validity, but I didn’t feel I had enough information about the process to see how they were calculating that.

- Program Logic for Evaluation Itself: Evaluation → Changes Made → Improved Outcomes. We usually ask “did the recommendations get implemented?”, but we also need to ask “if yes, what effect did that have? Did it make things better?” (and more challengingly, “Did it make things better compared to what would have happened had we not done the evaluation?”)

A few other fun tidbits:

- A fun quote on bias: “All who drink of this remedy recover in a short time, except those whom it does not help, who all die. Therefore, it is obvious that it fails only in incurable cases.” -Galen, ancient Greek physician

- Keynote speaker Dan Gardner mentioned that confidence is rewarded and doubt is punished (e.g., people are more likely to vote for someone who makes a confident declaration than one who discusses nuances, etc.). An audience member asked what he thought about this from a gender lens, as men are more often willing to confidently state something than women. Gardner’s reply was that we know that people are overconfident (e.g., when people say they are 100% sure, they are usually right about 70-80% of the time), so whenever he hears people say “What’s wrong with women? How can we make them be more confident?”, he thinks “How can we make men be less confident?”

- A great presentation from someone from the Treasury Board Secretariat provided a nice distinction between:

  - evaluation approach: high-level conceptual model used in undertaking evaluation in light of evaluation objectives (e.g., summative, formative, utilization-focused, goal-free, goal-based, theory-based, participatory) – not mutually exclusive (you can use more than one approach)

  - evaluation design: tactic for systematically gathering data that will assist evaluators in answering evaluation questions

  - evaluation methods: actual techniques used to gather & analyze data (e.g., survey, interview, document review)

| Concept | Level | Tied to |
|---|---|---|
| approach | strategic | evaluation objectives |
| design | tactical | evaluation questions |
| methods | operational | evaluation data |

- In addition to asking “are we doing the program well?”, we should also ask “are we doing the right thing?” Relevance is a question that the Treasury Board seems to focus on, but one that I haven’t given much thought. Something to consider more explicitly in future evaluations.

- Ask not just “how can I make my evaluations more useful?” but also, “how can I make them more influential?”

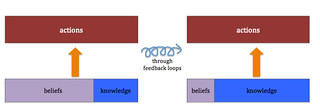

- In a presentation on Developmental Evaluation, the presenter showed a diagram something like this (I drew it in my notebook and have now reproduced it for this blog), which I really liked as a visual:

It shows how we are always making decisions on what actions to take based on a combination of knowledge and beliefs (we can never know *everything*), but we can test out our beliefs, feed that back in, and repeat, and over time we’ll be basing our actions more on evidence and less on beliefs

Footnotes

| ↑1 | Which, in truth, is probably better for any blog readers than the giant detailed notes that would have ended up here otherwise! |
|---|---|
| ↑2 | Apparently there is a very small program for evaluation credentialing in Japan, but it’s much smaller than the Canadian one. |
| ↑3 | Which is a very hot topic that leads to many debates, which I’ve experienced both at this conference and elsewhere. |
| ↑4 | Or, as a cynical colleague of mine once remarked she was involved in: decision-based evidence making. |
| ↑5 | Briefly, there is a hierarchy of evidence pyramid that is often inappropriately cited as being an absolute – that higher levels of the hierarchy are absolutely and in all cases better than lower levels – as opposed to the idea that the “best” evidence depends on the question being asked, not to mention the quality of the specific studies (e.g., a poorly done RCT is not the same as a properly done RCT). I’ve also had this debate more than once. |
| ↑6 | The presentation from the conference isn’t currently available online – some, but not all presenters, submitted their slide decks to the conference organizers for posting online – but here’s a link to the general idea of community report cards. The presentation I saw focused on building report cards in collaboration with the community. |
| ↑7 | Again, the presentation slide deck isn’t online, but I found this link to another conference presentation by the same group which describes the “fire tool”, in case anyone is interested in checking it out. |